What do robots actually see?

Oct. 2, 2024

OpenCV line detection

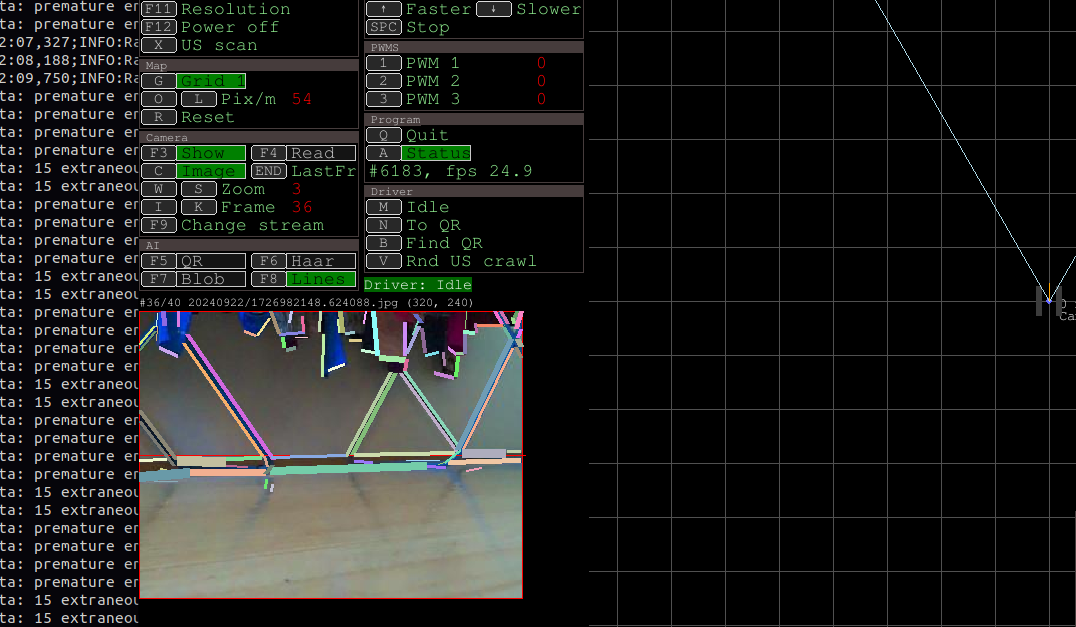

I was experimenting with real-time OpenCV line detection on a low-res picture (320x240 px) from the Raspberry Pi Camera V2 (the default Tortuguita front camera).

You can see the results in a short YouTube video.

I guess this is the fastest line detection method OpenCV provides; I haven't been able to notice any latency (yet).

Another advantage is that it needs only a low-res picture, so you save (plenty of) time in transmission.

In Python, it is basically three lines of code:

    # one-time initialization (it takes some time):
    self.line_detector = cv2.createLineSegmentDetector()

    ...

    # line detection on the actual frame
    original_image = cv2.imread(self.last_frame.filename, cv2.IMREAD_GRAYSCALE)  # grayscale gives best results
    lines, widths, precisions, _ = self.line_detector.detect(original_image)
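To do anything with the result, it helps to know its shape: `lines` comes back as an array of shape (N, 1, 4) holding `x1, y1, x2, y2` per segment, and it may be `None` when nothing was found. Here is a minimal sketch of unpacking it and drawing each segment in a random color. The helper names `lsd_to_segments` and `draw_segments` are made up for illustration and are not part of OpenCV.

```python
import random


def lsd_to_segments(lines):
    """Turn the `lines` result of detect() into plain (x1, y1, x2, y2) tuples.

    Accepts None (no lines found) and returns an empty list in that case.
    """
    if lines is None:
        return []
    return [tuple(float(v) for v in row[0]) for row in lines]


def draw_segments(height, width, segments, seed=None):
    """Draw every segment in a random color on a black canvas.

    OpenCV and NumPy are imported here, so lsd_to_segments() above
    stays usable on a machine without them.
    """
    import cv2
    import numpy as np

    rng = random.Random(seed)
    canvas = np.zeros((height, width, 3), dtype=np.uint8)
    for x1, y1, x2, y2 in segments:
        color = tuple(rng.randrange(64, 256) for _ in range(3))
        cv2.line(canvas, (round(x1), round(y1)), (round(x2), round(y2)), color, 1)
    return canvas
```

With a 320x240 frame, the call would look like `draw_segments(240, 320, lsd_to_segments(lines))`.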

Despite the low resolution, you can probably guess what is in the picture (the line colors are random). It seems there is a lot of fun to be had with this.

The goal I am aiming for now is some basic real-time orientation in space, based only on the camera (no GPS, compass or other sensors). The bot should then be able to detect its own movement and rotation, and compute which direction it is heading.

The steps for that will be:

1) reduce the noise (a simple filter on line length), then

2) "join" shattered straight lines into one (drop me a line if you can point me to some nice algorithm) and

3) finally, compare those "main orientation lines", like the horizon, between frames and compute the movement from that.
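The three steps above can be sketched roughly as follows. This is only one naive way to do it, not a tested solution. It assumes segments are plain `(x1, y1, x2, y2)` tuples. All function names and thresholds (`min_length`, `max_angle`, `max_offset`, `max_gap`) are invented for the example and would need tuning on real frames. Step 3 simply assumes the longest line in each frame is the same physical line, which will not always hold.

```python
import math


def length(seg):
    x1, y1, x2, y2 = seg
    return math.hypot(x2 - x1, y2 - y1)


def angle(seg):
    """Orientation in degrees, folded to [0, 180): segments have no direction."""
    x1, y1, x2, y2 = seg
    return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0


def angle_diff(a, b):
    """Signed difference a - b between two orientations, in [-90, 90)."""
    return (a - b + 90.0) % 180.0 - 90.0


def drop_short(segments, min_length=20.0):
    """Step 1: treat short segments as noise and drop them."""
    return [s for s in segments if length(s) >= min_length]


def _endpoints(seg):
    return [(seg[0], seg[1]), (seg[2], seg[3])]


def _offset(point, seg):
    """Distance from a point to the infinite line through seg (seg must not be zero-length)."""
    x1, y1, x2, y2 = seg
    px, py = point
    return abs((x2 - x1) * (py - y1) - (y2 - y1) * (px - x1)) / length(seg)


def can_join(a, b, max_angle=5.0, max_offset=3.0, max_gap=15.0):
    """True if b looks like a continuation of a: similar angle, nearly collinear, small gap."""
    if abs(angle_diff(angle(a), angle(b))) > max_angle:
        return False
    if max(_offset(p, a) for p in _endpoints(b)) > max_offset:
        return False
    gap = min(math.dist(p, q) for p in _endpoints(a) for q in _endpoints(b))
    return gap <= max_gap


def join(a, b):
    """Replace two segments with the one spanning their two farthest endpoints."""
    pts = _endpoints(a) + _endpoints(b)
    p, q = max(((p, q) for p in pts for q in pts), key=lambda pq: math.dist(*pq))
    return (p[0], p[1], q[0], q[1])


def merge_segments(segments):
    """Step 2: greedily join compatible segments until nothing changes (run drop_short first)."""
    merged = list(segments)
    changed = True
    while changed:
        changed = False
        for i in range(len(merged)):
            for j in range(i + 1, len(merged)):
                if can_join(merged[i], merged[j]):
                    merged[i] = join(merged[i], merged[j])
                    del merged[j]
                    changed = True
                    break
            if changed:
                break
    return merged


def rotation_between(prev_segments, curr_segments):
    """Step 3: how far the longest line turned in the image, in degrees (None if a frame is empty)."""
    if not prev_segments or not curr_segments:
        return None
    prev_main = max(prev_segments, key=length)
    curr_main = max(curr_segments, key=length)
    return angle_diff(angle(curr_main), angle(prev_main))
```

The greedy merge compares every pair of segments, which should be acceptable for the few dozen lines left after filtering a 320x240 frame. Note that the image y axis points down, so a positive result means the line turned clockwise on screen.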
